MPI Operator is developed by the Kubeflow community. A good place to start is their proposal document: https://github.com/kubeflow/community/blob/master/proposals/mpi-operator-proposal.md

We previously used it to deploy model training jobs to K8S. This time I want to look closely at how it is implemented.

Recap of the previous usage

MPIJob is a newly defined CRD that describes an MPI training job. For example, here is a model training job:

MPIJob YAML

```yaml
apiVersion: kubeflow.org/v1
kind: MPIJob
metadata:
  name: sw-simple-ml-gitlab
  namespace: mpi-operator
spec:
  mpiReplicaSpecs:
    Launcher:
      replicas: 1
      template:
        spec:
          containers:
          - args:
            - sleep 1m && mkdir simple_ml && cd simple_ml && horovodrun -np 2 --hostfile /etc/mpi/hostfile python main_with_horovod.py
            command:
            - "/bin/sh"
            - "-c"
            image: coreharbor.bdap.com/library/horovod-sw-base
            name: horovod-master
    Worker:
      replicas: 2
      template:
        spec:
          containers:
          - args:
            - git -c http.sslVerify=false clone https://gitlab.bdap.com/faraway/simple_ml.git && cd simple_ml && pip install -r requirements.txt && chmod +x init.sh && ./init.sh && sleep infinity
            command:
            - "/bin/sh"
            - "-c"
            image: coreharbor.bdap.com/library/horovod-sw-base
            name: horovod-worker
            resources:
              limits:
                nvidia.com/gpu: 1
          tolerations:
          - effect: NoSchedule
            key: gpu
            operator: Exists
  runPolicy:
    cleanPodPolicy: Running
  slotsPerWorker: 1
```

Given this MPIJob, we expect its controller to create one Launcher Pod and several Worker Pods to run the job, for example:

Launcher Pod (some fields omitted)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sw-simple-ml-gitlab-launcher
spec:
  containers:
  - args:
    - sleep 1m && mkdir simple_ml && cd simple_ml && horovodrun -np 2 --hostfile /etc/mpi/hostfile python main_with_horovod.py
    command:
    - /bin/sh
    - -c
    env:
    - name: OMPI_MCA_plm_rsh_agent
      value: /etc/mpi/kubexec.sh
    - name: OMPI_MCA_orte_default_hostfile
      value: /etc/mpi/hostfile
    - name: NVIDIA_VISIBLE_DEVICES
    - name: NVIDIA_DRIVER_CAPABILITIES
    image: coreharbor.bdap.com/library/horovod-sw-base
    name: horovod-master
    volumeMounts:
    - mountPath: /opt/kube
      name: mpi-job-kubectl
    - mountPath: /etc/mpi
      name: mpi-job-config
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-jf6hw
      readOnly: true
  initContainers:
  - env:
    - name: TARGET_DIR
      value: /opt/kube
    - name: NAMESPACE
      value: mpi-operator
    image: mpioperator/kubectl-delivery:latest
    imagePullPolicy: IfNotPresent
    name: kubectl-delivery
    resources: ...
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /opt/kube
      name: mpi-job-kubectl
    - mountPath: /etc/mpi
      name: mpi-job-config
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-jf6hw
      readOnly: true
  restartPolicy: Never
  serviceAccountName: sw-simple-ml-gitlab-launcher
  volumes:
  - emptyDir: {}
    name: mpi-job-kubectl
  - configMap:
      defaultMode: 420
      items:
      - key: kubexec.sh
        mode: 365
        path: kubexec.sh
      - key: hostfile
        mode: 292
        path: hostfile
      - key: discover_hosts.sh
        mode: 365
        path: discover_hosts.sh
      name: sw-simple-ml-gitlab-config
    name: mpi-job-config
  - name: kube-api-access-jf6hw
    ...
```
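The mode values on the ConfigMap volume (420, 365, 292) are printed in decimal, which hides the familiar octal permissions. The following is a small helper of my own, not part of the operator, that decodes them with Python's standard stat module.

```python
import stat

# Decimal mode values copied from the Launcher Pod's ConfigMap volume.
modes = {
    "defaultMode": 420,
    "kubexec.sh": 365,
    "hostfile": 292,
    "discover_hosts.sh": 365,
}


def describe(mode: int) -> str:
    """Return the octal form and an ls-style permission string for a file mode."""
    # S_IFREG marks a regular file so that filemode() prints a leading '-'.
    return f"{mode:04o} {stat.filemode(stat.S_IFREG | mode)}"


for name, mode in modes.items():
    print(f"{name:18} {describe(mode)}")
```

In other words, the two scripts are readable and executable by everyone (0555), while the hostfile is read-only (0444).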

Worker Pod (some fields omitted)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sw-simple-ml-gitlab-worker-0
spec:
  containers:
  - args:
    - git -c http.sslVerify=false clone https://gitlab.bdap.com/faraway/simple_ml.git && cd simple_ml && pip install -r requirements.txt && chmod +x init.sh && ./init.sh && sleep infinity
    command:
    - /bin/sh
    - -c
    image: coreharbor.bdap.com/library/horovod-sw-base
    imagePullPolicy: Always
    name: horovod-worker
    resources:
      limits:
        nvidia.com/gpu: "1"
      requests:
        nvidia.com/gpu: "1"
    volumeMounts:
    - mountPath: /etc/mpi
      name: mpi-job-config
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-qw2jr
      readOnly: true
  tolerations:
  - effect: NoSchedule
    key: gpu
    operator: Exists
  volumes:
  - configMap:
      defaultMode: 420
      items:
      - key: kubexec.sh
        mode: 365
        path: kubexec.sh
      name: sw-simple-ml-gitlab-config
    name: mpi-job-config
  - name: kube-api-access-qw2jr
    ...
```

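Guessing what the Controller does from the generated Pods

First, the Controller has to copy the information in the MPIJob into the Pods it generates. For a Worker Pod that is all it takes: the Pod only needs to wait for the Launcher to send it commands.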

For the Launcher Pod, the Controller has to do a few extra things, including:

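Adding volumes. /etc/mpi mounts a ConfigMap whose hostfile lists the names of the workers. The second one, /opt/kube, mounts an emptyDir that receives whatever the initContainer produces.

Adding an initContainer named kubectl-delivery. It has an environment variable TARGET_DIR set to /opt/kube, so before reading its implementation we can guess that its job is to put something under /opt/kube for the main container to use.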
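To make the hostfile part concrete, here is a minimal Python sketch of how the two host lists in the ConfigMap could be rendered from the MPIJob's name, worker replica count and slotsPerWorker. The function names are my own, and the line formats are copied from the ConfigMap contents shown later in this post. It illustrates the idea and is not the operator's actual code.

```python
from typing import List


def worker_names(job_name: str, replicas: int) -> List[str]:
    """Worker Pod names follow the <job>-worker-<index> pattern seen in this post."""
    return [f"{job_name}-worker-{i}" for i in range(replicas)]


def render_hostfile(job_name: str, replicas: int, slots_per_worker: int) -> str:
    """One '<pod> slots=<n>' line per worker."""
    return "".join(
        f"{name} slots={slots_per_worker}\n"
        for name in worker_names(job_name, replicas)
    )


def render_discover_hosts(job_name: str, replicas: int, slots_per_worker: int) -> str:
    """One 'echo <pod>:<n>' line per worker, as in discover_hosts.sh."""
    return "".join(
        f"echo {name}:{slots_per_worker}\n"
        for name in worker_names(job_name, replicas)
    )


print(render_hostfile("sw-simple-ml-gitlab", 2, 1), end="")
print(render_discover_hosts("sw-simple-ml-gitlab", 2, 1), end="")
```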

kubectl-delivery

kubectl-delivery is the key piece, so let's go through the code and see exactly what it does:

According to the Dockerfile, its startup command is cp /bin/kubectl /opt/kube/kubectl; /bin/kubectl-delivery -alsologtostderr, which copies the kubectl binary into /opt/kube.

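In the source, it parses the hostfile under /etc/mpi (generated by the Controller and mounted through the ConfigMap) to find the Pod names of the workers.

In NewKubectlDeliveryController, these Pods are added to the watchedPods list.

It then watches the worker Pods and removes each one from watchedPods once it is running normally.

After all workers are running, it generates a hosts file under /opt/kube that records the Pod names and IPs of the workers and of itself (its own entry is taken from the last line of /etc/hosts).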

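So kubectl-delivery does three things in total:

Wait for the Worker Pods to become ready

Generate the hosts file, which contains the names and IPs of the Launcher and Worker Pods (although I later found that it does not seem to be used)

Copy the kubectl executable

Both kubectl and the hosts file are placed under /opt/kube for the main container to use.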
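The waiting logic described above can be sketched in a few lines of Python. This is a simulation rather than the real controller. The events iterable stands in for the Kubernetes watch stream, its (pod_name, phase, ip) tuple layout and the IPs are invented for illustration, and the IP<TAB>name layout of the hosts file is an assumption. The Launcher's own entry is left out for brevity.

```python
from typing import Dict, Iterable, List, Tuple


def parse_hostfile(text: str) -> List[str]:
    """Return the Pod names in a hostfile (first field of each non-empty line)."""
    return [line.split()[0] for line in text.splitlines() if line.strip()]


def wait_for_workers(
    hostfile_text: str, events: Iterable[Tuple[str, str, str]]
) -> Dict[str, str]:
    """Consume (pod_name, phase, ip) events until every watched Pod is Running."""
    watched = set(parse_hostfile(hostfile_text))
    ips: Dict[str, str] = {}
    for name, phase, ip in events:
        if not watched:
            break
        if name in watched and phase == "Running":
            watched.discard(name)  # this worker is ready, stop watching it
            ips[name] = ip
    if watched:
        raise TimeoutError(f"still waiting for: {sorted(watched)}")
    return ips


def render_hosts(ips: Dict[str, str]) -> str:
    """Render one 'IP<TAB>name' line per Pod (format assumed for this sketch)."""
    return "".join(f"{ip}\t{name}\n" for name, ip in sorted(ips.items()))


hostfile = (
    "sw-simple-ml-gitlab-worker-0 slots=1\n"
    "sw-simple-ml-gitlab-worker-1 slots=1\n"
)
# Hypothetical event sequence and IPs.
events = [
    ("sw-simple-ml-gitlab-worker-0", "Pending", ""),
    ("sw-simple-ml-gitlab-worker-1", "Running", "10.244.1.11"),
    ("sw-simple-ml-gitlab-worker-0", "Running", "10.244.2.12"),
]
print(render_hosts(wait_for_workers(hostfile, events)), end="")
```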

Launcher main container

This raises a question: where does the Launcher's main container actually use the two files in /opt/kube?

If we skip running kubectl-delivery, we can look at the error reported by the main container:

```text
ssh not successful for host sw-simple-ml-gitlab-worker-1:
shift
exec sw-simple-ml-gitlab-worker-1 -- /bin/sh -c true
for host sw-simple-ml-gitlab-worker-0:
shift
exec sw-simple-ml-gitlab-worker-0 -- /bin/sh -c true
"/usr/local/bin/horovodrun", line 8, in <module>
"/usr/local/lib/python3.8/dist-packages/horovod/runner/launch.py", line 773, in run_commandline
"/usr/local/lib/python3.8/dist-packages/horovod/runner/launch.py", line 763, in _run
return _run_static(args)
"/usr/local/lib/python3.8/dist-packages/horovod/runner/launch.py", line 589, in _run_static
'could not connect to some hosts via ssh')
```

We can see that the failing call comes from /etc/mpi/kubexec.sh. So who is calling kubexec.sh?

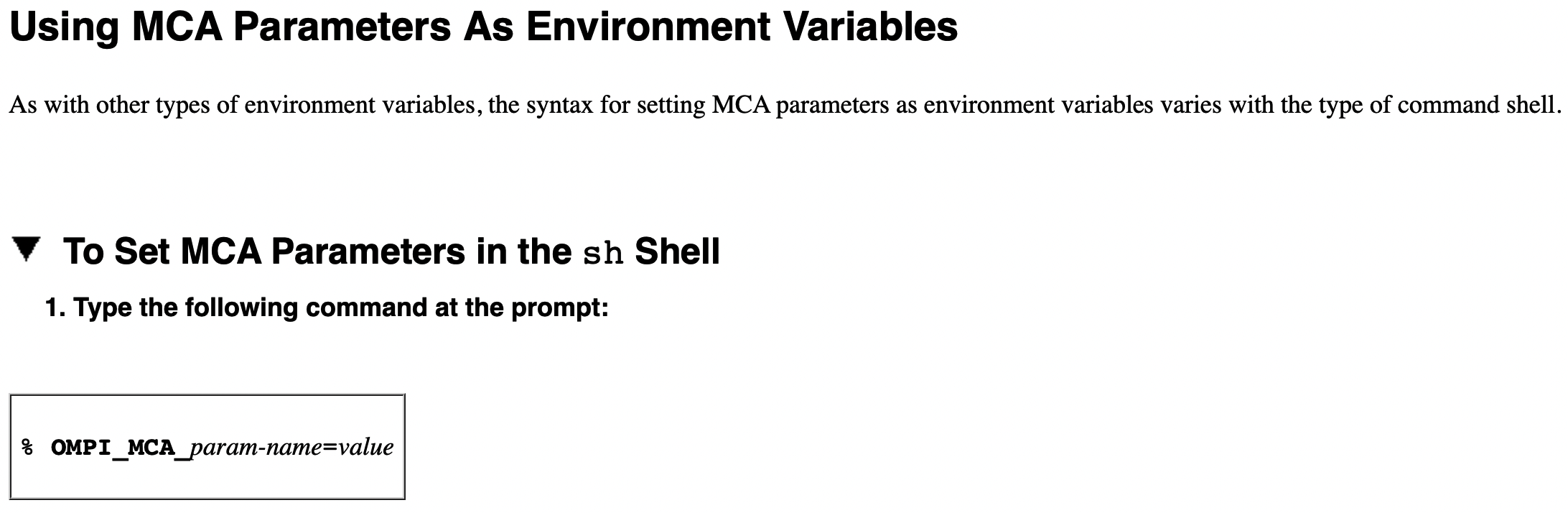

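Looking at the Launcher Pod, we find an environment variable named OMPI_MCA_plm_rsh_agent. A reasonable guess is that mpirun uses the script this variable points to in order to reach the workers before it starts.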

See the documentation on MCA parameters for mpirun: https://docs.oracle.com/cd/E19708-01/821-1319-10/mca-params.html

And look up what the plm_rsh_agent parameter does: https://www.open-mpi.org/faq/?category=rsh

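It turns out that this parameter names the program that is prefixed to a command in order to run it on a remote host. Its value is normally ssh or rsh.

For example, to run ls somedir on the server server-name, the command that gets executed is $OMPI_MCA_plm_rsh_agent server-name ls somedir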

Let's look at kubexec.sh in detail:

```sh
#!/bin/sh
set -x
POD_NAME=$1
shift
/opt/kube/kubectl exec ${POD_NAME} -- /bin/sh -c "$*"
```

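set -x prints each command as the script executes it

POD_NAME is the first argument received, that is, the name of the server to connect to

shift moves the arguments along (dropping the first one, POD_NAME).

kubectl exec then runs the remaining command remotely

The net effect is that the ssh connection method is replaced by kubectl exec (clever).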
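To see the substitution end to end, here is a Python model of the two steps: the launcher calling the rsh agent, and kubexec.sh rewriting that call into a kubectl exec. It is a simplified sketch. The function names are mine, and the real mpirun passes a much longer command than ls somedir.

```python
from typing import List

KUBECTL = "/opt/kube/kubectl"  # path hard-coded in kubexec.sh


def rsh_agent_argv(agent: str, host: str, command: List[str]) -> List[str]:
    """Model the call form '<agent> <host> <command...>'."""
    return [agent, host, *command]


def kubexec(argv: List[str]) -> List[str]:
    """Mirror kubexec.sh: argv[0] is the script, argv[1] the Pod name, the rest the command."""
    pod_name, rest = argv[1], argv[2:]
    # "$*" joins the remaining arguments with single spaces (default IFS).
    return [KUBECTL, "exec", pod_name, "--", "/bin/sh", "-c", " ".join(rest)]


call = rsh_agent_argv(
    "/etc/mpi/kubexec.sh", "sw-simple-ml-gitlab-worker-0", ["ls", "somedir"]
)
print(kubexec(call))
```

Only the Pod name is passed through to kubectl. No IP address or SSH key is involved.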

We can also take a quick look at the command that actually gets run. Here is an excerpt (the full command contains a large number of irrelevant environment variables):

```text
/opt/kube/kubectl exec sw-simple-ml-gitlab-worker-1 -- /bin/sh -c cd /simple_ml > /dev/null 2>&1 ;
```

The full command sets HOROVOD_GLOO_RENDEZVOUS_ADDR=10.244.3.140, which is exactly the Launcher Pod's IP. This shows that the Worker Pods communicate with the Launcher Pod as the hub.

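One remaining question is that the hosts file under /opt/kube does not seem to be used. I tried deleting it (by changing the kubectl-delivery command to cp /bin/kubectl /opt/kube/kubectl; /bin/kubectl-delivery -alsologtostderr; rm /opt/kube/hosts) and the job still ran correctly, because kubectl exec only needs the Pod name, not the IP. Likewise, kubexec.sh does not need to be mounted in the Worker (the worker I add by hand later confirms this).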

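Hands-on

We now have a rough understanding of the flow. Let's verify it with an example and see whether anything was missed. Suppose I want to add one more worker without using MPI Operator and without modifying the MPIJob: how would I write the YAML by hand?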

Create a Worker Pod that runs sleep infinity. Here I did not mount kubexec.sh or the hostfile.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: add-worker
  namespace: mpi-operator
spec:
  containers:
  - args:
    - git -c http.sslVerify=false clone https://gitlab.bdap.com/faraway/simple_ml.git && cd simple_ml && pip install -r requirements.txt && chmod +x init.sh && ./init.sh && sleep infinity
    command:
    - /bin/sh
    - -c
    image: coreharbor.bdap.com/library/horovod-sw-base
    imagePullPolicy: Always
    name: horovod-worker
    resources:
      limits:
        nvidia.com/gpu: "1"
      requests:
        nvidia.com/gpu: "1"
  dnsConfig:
    nameservers:
    - 10.105.222.6
  tolerations:
  - effect: NoSchedule
    key: gpu
    operator: Exists
```

Add this Pod's name to the hostfile in the ConfigMap. Note that the ConfigMap's ownerReferences must be deleted first, so that the Controller does not overwrite our change.

```yaml
apiVersion: v1
data:
  discover_hosts.sh: |-
    #!/bin/sh
    echo sw-simple-ml-gitlab-worker-0:1
    echo sw-simple-ml-gitlab-worker-1:1
  hostfile: |
    sw-simple-ml-gitlab-worker-0 slots=1
    sw-simple-ml-gitlab-worker-1 slots=1
    add-worker slots=1
  kubexec.sh: |-
    #!/bin/sh
    set -x
    POD_NAME=$1
    shift
    /opt/kube/kubectl exec ${POD_NAME} -- /bin/sh -c "$*"
kind: ConfigMap
metadata:
  name: sw-simple-ml-gitlab-config
  namespace: mpi-operator
```
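The same edit can be expressed as a small transformation on the ConfigMap manifest loaded as a Python dict. This is only a sketch of the manual change described above. The add_worker helper and the simplified ownerReferences entry are illustrative, and like the manual edit it touches only hostfile, not discover_hosts.sh.

```python
import copy


def add_worker(config_map: dict, pod_name: str, slots: int = 1) -> dict:
    """Return a copy of the ConfigMap with one more host and no ownerReferences."""
    cm = copy.deepcopy(config_map)
    # Detach the ConfigMap from the MPIJob, as described in the text.
    cm.get("metadata", {}).pop("ownerReferences", None)
    hostfile = cm["data"]["hostfile"]
    if hostfile and not hostfile.endswith("\n"):
        hostfile += "\n"
    cm["data"]["hostfile"] = hostfile + f"{pod_name} slots={slots}\n"
    return cm


original = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {
        "name": "sw-simple-ml-gitlab-config",
        "namespace": "mpi-operator",
        # Simplified, hypothetical owner entry.
        "ownerReferences": [{"kind": "MPIJob", "name": "sw-simple-ml-gitlab"}],
    },
    "data": {
        "hostfile": (
            "sw-simple-ml-gitlab-worker-0 slots=1\n"
            "sw-simple-ml-gitlab-worker-1 slots=1\n"
        ),
    },
}

patched = add_worker(original, "add-worker")
print(patched["data"]["hostfile"], end="")
```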

Create a new Launcher Pod and change np to 3 in the command it runs.

However, this fails, apparently because of a permissions problem.

```text
Error from server (Forbidden): pods "add-worker" is forbidden: User "system:serviceaccount:mpi-operator:sw-simple-ml-gitlab-launcher" cannot create resource "pods/exec" in API group "" in the namespace "mpi-operator"
```

We need to modify the Role in RBAC and add the new Pod to resourceNames. While we are at it, we also remove ownerReferences so that the operator does not overwrite the change.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: sw-simple-ml-gitlab-launcher
  namespace: mpi-operator
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resourceNames:
  - sw-simple-ml-gitlab-worker-0
  - sw-simple-ml-gitlab-worker-1
  - add-worker
  resources:
  - pods/exec
  verbs:
  - create
```
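Why the extra resourceNames entry matters can be shown with a deliberately simplified model of how a Role rule is matched. The sketch below ignores apiGroups, wildcards and everything else a real authorizer handles, and it assumes that the Role generated by the operator listed only the two original workers.

```python
from typing import Dict, List

Rule = Dict[str, List[str]]


def allowed(rules: List[Rule], verb: str, resource: str, name: str) -> bool:
    """Simplified RBAC check: some rule must match verb, resource and object name."""
    for rule in rules:
        if verb not in rule.get("verbs", []):
            continue
        if resource not in rule.get("resources", []):
            continue
        names = rule.get("resourceNames", [])
        # A rule without resourceNames applies to every object of that resource.
        if not names or name in names:
            return True
    return False


workers = ["sw-simple-ml-gitlab-worker-0", "sw-simple-ml-gitlab-worker-1"]
read_pods = {"resources": ["pods"], "verbs": ["get", "list", "watch"]}

# Assumed state before the edit: exec is limited to the two generated workers.
before = [read_pods, {"resources": ["pods/exec"], "verbs": ["create"],
                      "resourceNames": workers}]
# After the edit: add-worker is listed as well.
after = [read_pods, {"resources": ["pods/exec"], "verbs": ["create"],
                     "resourceNames": workers + ["add-worker"]}]

print(allowed(before, "create", "pods/exec", "add-worker"))
print(allowed(after, "create", "pods/exec", "add-worker"))
```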

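Summary

What the controller does:

Based on the number of workers requested in the MPIJob, it generates their Pod names and stores them in a ConfigMap, together with the pre-written kubexec.sh script

It extracts the worker Pod spec from the MPIJob and creates the workers; there should be nothing else it needs to do for them

It extracts the launcher Pod spec from the MPIJob and creates the launcher

It adds an initContainer to the Launcher; the two share /opt/kube

It mounts the ConfigMap at /etc/mpi and adds environment variables so that mpirun calls /etc/mpi/kubexec.sh

It adds a ServiceAccount to the Launcher Pod and grants it permission to exec into the worker Pods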
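As a recap, the Launcher-specific steps can be written as one function that decorates a Pod manifest. This is a sketch reconstructed from the generated Launcher Pod shown earlier, not the operator's actual Go code. The decorate_launcher name is mine, and fields such as the service-account token mount are left out.

```python
import copy


def decorate_launcher(pod: dict, job_name: str, namespace: str) -> dict:
    """Add the env vars, volumes, initContainer and ServiceAccount seen on the Launcher."""
    pod = copy.deepcopy(pod)
    spec = pod["spec"]
    mounts = [
        {"name": "mpi-job-kubectl", "mountPath": "/opt/kube"},
        {"name": "mpi-job-config", "mountPath": "/etc/mpi"},
    ]
    for container in spec["containers"]:
        # Point mpirun at kubexec.sh and at the generated hostfile.
        container.setdefault("env", []).extend([
            {"name": "OMPI_MCA_plm_rsh_agent", "value": "/etc/mpi/kubexec.sh"},
            {"name": "OMPI_MCA_orte_default_hostfile", "value": "/etc/mpi/hostfile"},
        ])
        container.setdefault("volumeMounts", []).extend(copy.deepcopy(mounts))
    # The initContainer shares /opt/kube with the main container.
    spec.setdefault("initContainers", []).append({
        "name": "kubectl-delivery",
        "image": "mpioperator/kubectl-delivery:latest",
        "env": [
            {"name": "TARGET_DIR", "value": "/opt/kube"},
            {"name": "NAMESPACE", "value": namespace},
        ],
        "volumeMounts": copy.deepcopy(mounts),
    })
    spec.setdefault("volumes", []).extend([
        {"name": "mpi-job-kubectl", "emptyDir": {}},
        {"name": "mpi-job-config", "configMap": {"name": f"{job_name}-config"}},
    ])
    spec["serviceAccountName"] = f"{job_name}-launcher"
    spec["restartPolicy"] = "Never"
    return pod


template = {"spec": {"containers": [{"name": "horovod-master"}]}}
launcher = decorate_launcher(template, "sw-simple-ml-gitlab", "mpi-operator")
print(launcher["spec"]["serviceAccountName"])
```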